So, everything is metadata-driven these days; the concept is no longer new. This is especially true in the land of IoT, where you have wildly varying sources of data in all shapes and sizes.

That leaves an age-old problem though – how do you populate your metadata? If your data consists of thousands of different values, this is a significant issue.

Well, for the RDBMS world of old, there’s a simple yet powerful plugin from Roland which exposes the JDBC metadata directly to PDI:

https://github.com/rpbouman/pentaho-pdi-plugin-jdbc-metadata

However, despite years of promises of self-describing systems (the horrendousness that was WSDL, and more), we’ve actually gone backwards: data is getting simpler and going back to basic formats.

That may be as basic as a text file, in which case there is also a plugin to help you: the file meta plugin. It scans your text file and attempts to guess:

- separator

- column headers

- data types

Obviously it can only ever be a guess, but it’s better than nothing. Maybe you’ll even have a spec, and maybe that spec will be up to date (lol, OK, sorry, I know, that’s not going to happen).
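To make the guessing concrete, here is a minimal Python sketch of the same idea. It is not the file meta plugin's actual logic; the candidate separator list, the header heuristic and every function name below are my own assumptions for illustration.

```python
import csv

# Assumption: these are the only separators worth trying.
CANDIDATE_SEPARATORS = [",", ";", "\t", "|"]


def guess_separator(lines):
    """Pick the candidate that occurs the same non-zero number of times on every line."""
    best, best_count = None, 0
    for sep in CANDIDATE_SEPARATORS:
        counts = {line.count(sep) for line in lines}
        if len(counts) == 1:
            count = counts.pop()
            if count > best_count:
                best, best_count = sep, count
    return best


def guess_type(values):
    """Return the narrowest of integer / number / string that fits every non-empty value."""
    values = [v for v in values if v != ""]
    if not values:
        return "string"
    for name, caster in (("integer", int), ("number", float)):
        try:
            for v in values:
                caster(v)
        except ValueError:
            continue  # this type does not fit, try the next wider one
        return name
    return "string"


def guess_layout(sample_text):
    """Guess separator, header presence and column types from a text sample."""
    lines = [ln for ln in sample_text.splitlines() if ln.strip()]
    sep = guess_separator(lines)
    if sep is None:
        raise ValueError("no consistent separator found")
    rows = list(csv.reader(lines, delimiter=sep))
    columns = list(zip(*rows))
    first_row_types = [guess_type(col[:1]) for col in columns]
    body_types = [guess_type(col[1:]) for col in columns]
    # Heuristic (an assumption): the first row is a header if it is all text
    # while at least one column underneath it is not.
    has_header = all(t == "string" for t in first_row_types) and any(
        t != "string" for t in body_types
    )
    if has_header:
        names, types = list(rows[0]), body_types
    else:
        names = [f"col{i + 1}" for i in range(len(columns))]
        types = [guess_type(col) for col in columns]
    return {"separator": sep, "header": has_header, "columns": dict(zip(names, types))}


layout = guess_layout("id;name;temp\n1;pump;20.5\n2;valve;21\n")
print(layout)
```

A sketch like this falls over as soon as a quoted field contains the separator, which is exactly why the result should be treated as a starting point and not as the spec.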

It’s possible you have an XML data source, perhaps from a more elderly system, but more likely these days you’re looking at JSON.

In the PDI XML world, the XML input step is pretty mature. It’s easy to use, with great get nodes and get paths buttons. Couldn’t be simpler. Kinda sad, though, that the step matures just as the format becomes obsolete.

In the JSON world, it’s not so good. The step is less mature and therefore doesn’t have these nice config features. So given a JSON file you know nothing about, what to do? Well, the answer is to hit Stack Overflow, grab a snippet of code that gives you all the JSONPaths in a JSON file, and execute it in PDI! See the samples here. (On a positive note, the performance of the JSON input step in PDI 6+ is now up to an expected level.)
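For a feel of what such a snippet does, here is a minimal sketch in Python. It is not the code from the samples; the function names are mine, and collapsing every array index to `[*]` is an assumption that suits structure discovery, where you care about the shape and not the position.

```python
import json


def json_paths(node, path="$"):
    """Yield a JSONPath-style string for every leaf value in a parsed JSON document."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from json_paths(value, f"{path}.{key}")
    elif isinstance(node, list):
        # All array elements share one wildcard path.
        for item in node:
            yield from json_paths(item, f"{path}[*]")
    else:
        yield path


def distinct_paths(text):
    """Parse a JSON string and return its distinct leaf paths, sorted."""
    return sorted(set(json_paths(json.loads(text))))


doc = '{"device": {"id": 7, "tags": ["a", "b"]}, "readings": [{"t": 1, "v": 2.5}, {"t": 2}]}'
for p in distinct_paths(doc):
    print(p)
```

Note that keys containing dots or spaces would need bracket notation to be valid JSONPath, and empty objects or arrays produce no path at all in this version.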

So great, we’ve understood what’s in our thousands of input files. Job done.

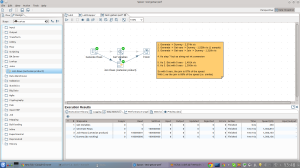

The use case is more nuanced than that. Sure, you can use this scan to initialise your metadata repository, which in turn configures your transformation via metadata injection of the JSON input step (a PDI 7 feature, btw). But you can also use the scan to check whether the structure of your incoming files is as expected. You can diff the attributes, and if you have new keys, add them to your metadata library. If you have missing keys, you can raise an alert. It’s important to *look* for change and deal with it rather than waiting to be notified of it. The latter will never happen!
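The diff itself is nothing more than two set differences. A minimal sketch in Python, assuming you already hold the expected paths from your metadata library and the paths scanned from an incoming file (the function name and the sample paths are made up for illustration):

```python
def diff_keys(expected, observed):
    """Compare the paths we expect against the paths found in an incoming file."""
    expected, observed = set(expected), set(observed)
    return {
        "new": sorted(observed - expected),      # candidates to add to the metadata library
        "missing": sorted(expected - observed),  # candidates for an alert
    }


# Hypothetical example data.
expected_paths = ["$.device.id", "$.readings[*].t", "$.readings[*].v"]
observed_paths = ["$.device.id", "$.readings[*].t", "$.readings[*].unit"]

changes = diff_keys(expected_paths, observed_paths)
if changes["new"]:
    print("new keys:", changes["new"])
if changes["missing"]:
    print("missing keys:", changes["missing"])
```

What you do with each bucket (auto-register, alert, reject the file) is a policy decision; the sketch only surfaces the change.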